Computer processors are designed to handle pretty much anything. However, a CPU has relatively few cores and can only work on a limited number of calculations at a time, so workloads made up of huge numbers of calculations take a very long time to finish. Graphics cards, on the other hand, have become so specialized that they surpass traditional processors when it comes to performing large numbers of calculations in parallel.

Examples of such workloads include pedestrian detection for autonomous driving, medical imaging, supercomputing and machine learning. This comes as no surprise: for these highly parallel workloads, GPUs can offer 10 to 100 times more computational power than traditional CPUs. That is one of the main reasons why graphics cards currently power some of the most advanced neural networks used in deep learning.

What exactly makes GPUs ideal for deep learning?

If you are a tech-savvy reader, you might think that it has to do with parallelism, but you would be wrong, my friend. The real reason is simpler than that and has to do with memory bandwidth. CPUs can fetch small amounts of memory quickly, whereas GPUs have a high memory latency, which makes them slower at this type of work. GPUs excel, however, at fetching very large amounts of memory: the best GPUs reach a memory bandwidth of up to 750GB/s, which is huge compared to the roughly 50GB/s that the best CPUs can handle. But how do we overcome the latency issues?
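
To make the bandwidth-versus-latency trade-off concrete, here is a toy Python model in which every fetch costs a fixed latency plus the amount of data divided by the bandwidth. The 50GB/s and 750GB/s bandwidths come from the text; the 100 ns and 500 ns latencies are illustrative assumptions, not measured values for any particular chip.

```python
# Toy model: time to fetch a block of memory = fixed latency + size / bandwidth.
# Bandwidths are the figures quoted in the text; latencies are ASSUMED values.

def fetch_time_s(num_bytes: float, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Seconds needed to fetch num_bytes, including a fixed start-up latency."""
    return latency_s + num_bytes / bandwidth_bytes_per_s


CPU = {"latency_s": 100e-9, "bandwidth_bytes_per_s": 50e9}   # assumed 100 ns latency
GPU = {"latency_s": 500e-9, "bandwidth_bytes_per_s": 750e9}  # assumed 500 ns latency

for label, size in [("64 B", 64), ("1 GB", 1_000_000_000)]:
    cpu = fetch_time_s(size, **CPU)
    gpu = fetch_time_s(size, **GPU)
    winner = "CPU" if cpu < gpu else "GPU"
    print(f"{label}: CPU {cpu:.3e} s, GPU {gpu:.3e} s -> {winner} is faster")
```

Under these assumptions the CPU-like profile wins the 64-byte fetch, while the GPU-like profile moves 1GB roughly 15 times faster (about 1.3 ms versus 20 ms).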

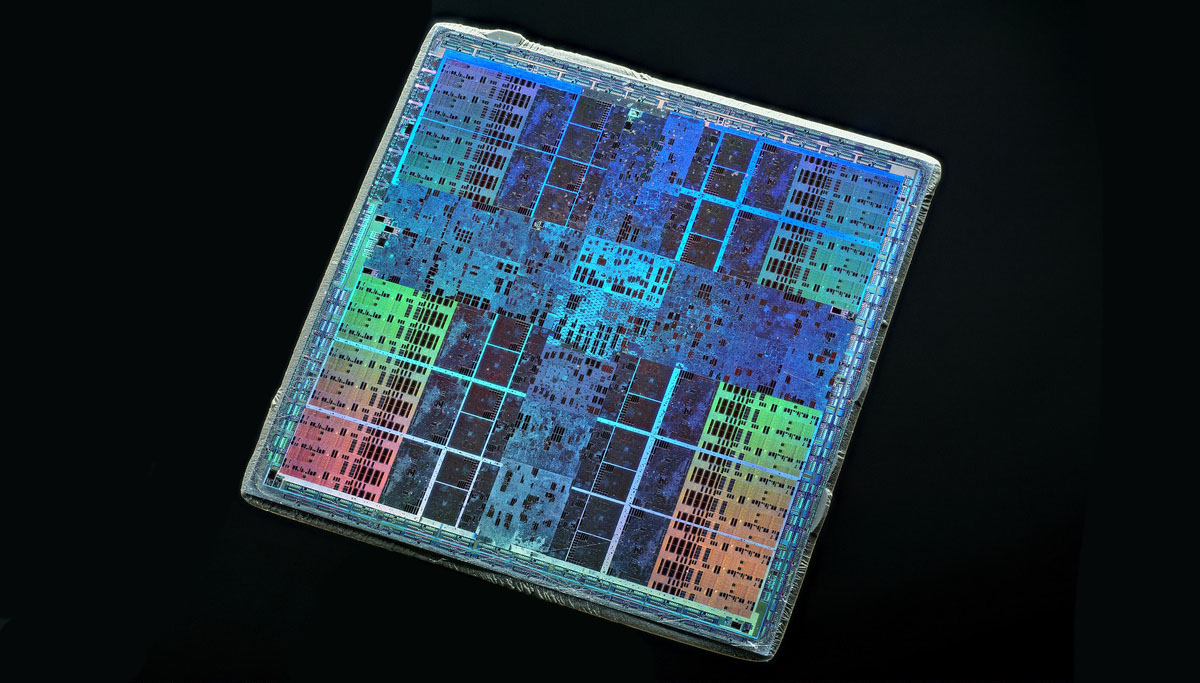

Image source: NVidia.com

Simple: we use more than one processing unit. Unlike CPUs, GPUs are composed of thousands of cores, so when a task involves large amounts of memory and large matrices, you only have to wait for the initial fetch. Subsequent fetches arrive much faster, because while the first batch of data is still being unloaded, the other cores have already queued up their own requests, so data keeps streaming in without a fresh wait. With so many threads in flight, the latency is effectively masked, which lets the GPU make full use of its high bandwidth. This is called thread parallelism, and it’s the second reason why GPUs outperform traditional CPUs when it comes to deep learning.
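
The latency-hiding argument can be sketched with another toy model, again under loudly stated assumptions: every request pays the same fixed latency, each chunk then occupies the memory bus for a fixed transfer time, and `in_flight` (a hypothetical parameter) is how many requests the cores keep queued at once. With one request at a time every chunk waits out the full latency; with many queued requests only the first one does.

```python
# Toy model of latency hiding. ASSUMPTIONS (illustrative, not measured):
# every request pays the same fixed latency, each chunk then occupies the
# memory bus for `transfer_s`, and `in_flight` requests can be queued at once.

def serial_time(n_chunks: int, latency_s: float, transfer_s: float) -> float:
    """One request at a time: every chunk waits out the full latency."""
    return n_chunks * (latency_s + transfer_s)


def overlapped_time(n_chunks: int, latency_s: float, transfer_s: float, in_flight: int) -> float:
    """Many queued requests: only the first chunk exposes the full latency.

    In steady state a new chunk completes every (latency + transfer) / in_flight
    seconds, but never faster than the bus can deliver one chunk (transfer_s).
    """
    steady = max(transfer_s, (latency_s + transfer_s) / in_flight)
    return (latency_s + transfer_s) + (n_chunks - 1) * steady


LATENCY_S = 500e-9   # assumed memory latency
TRANSFER_S = 1e-9    # assumed bus time per chunk
N = 10_000           # number of chunks to fetch

print(f"one at a time:  {serial_time(N, LATENCY_S, TRANSFER_S) * 1e3:.2f} ms")
print(f"1000 in flight: {overlapped_time(N, LATENCY_S, TRANSFER_S, 1000) * 1e6:.1f} us")
```

With these assumed numbers, 10,000 chunks take about 5 ms one at a time but only about 10.5 microseconds with 1,000 requests in flight; with `in_flight=1` the two functions agree, as they should.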

The third reason is less important performance-wise, but it offers additional insight into GPUs’ undeniable supremacy over CPUs. The first part of the process involves fetching data from main memory (RAM) and transferring it to on-chip memory, namely the L1 cache and registers. Registers are attached directly to the execution unit, which for GPUs is the stream processor and for CPUs the core. This is where all the computation happens. Normally, you’d want both the L1 cache and the registers to sit as close to the execution unit as possible, and to keep these memories small so they can be accessed quickly: the larger the memory, the longer it takes to access.

What makes graphics cards so beneficial is that every processing unit has its own small set of registers, so the aggregate register size exceeds that of a CPU by more than 30 times while still being twice as fast. This adds up to as much as 14MB of register memory operating at 80TB/s. By comparison, an average CPU’s L1 cache operates at no more than 5TB/s, while CPU registers rarely exceed 128KB and run at 10, maybe 20TB/s. CPU registers work quite differently from GPU registers, so the comparison is not exact, but the difference in size matters far more than the difference in speed.
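
The 14MB figure follows from simple multiplication once you know the register file of a single stream processor. The sketch below assumes 64K 32-bit registers per stream processor and 56 stream processors, figures in the range of a Pascal-generation data-center GPU; they are assumptions chosen for illustration, and a different GPU will give a different total.

```python
# ASSUMED figures for illustration (not taken from the text):
REGISTERS_PER_SP = 64 * 1024   # 64K registers per stream processor
BYTES_PER_REGISTER = 4         # each register holds 32 bits
NUM_SPS = 56                   # number of stream processors on the chip

per_sp_bytes = REGISTERS_PER_SP * BYTES_PER_REGISTER  # register file of one stream processor
total_bytes = per_sp_bytes * NUM_SPS                  # aggregate register memory on the GPU

print(f"{per_sp_bytes // 1024} KiB per stream processor, "
      f"{total_bytes / 2**20:.0f} MiB in total")
# prints: 256 KiB per stream processor, 14 MiB in total
```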

GPUs can keep large amounts of data in the L1 cache and register files, which lets them reuse tiles of convolutions and matrix multiplications. The best matrix multiplication algorithms use just two tiles, ranging from 64×32 to 96×64 numbers, for the two input matrices held in L1 cache, plus a register tile of 16×16 to 32×32 numbers for the output sums of each thread block. A thread block holds up to 1024 threads, with 8 thread blocks per stream processor and 60 stream processors in the whole GPU.
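
The tile sizes quoted above translate into modest amounts of on-chip memory. This quick calculation assumes the tiles hold 32-bit (4-byte) floats, which the text does not state.

```python
BYTES_PER_ELEMENT = 4  # ASSUMPTION: tiles hold 32-bit floats


def tile_bytes(rows: int, cols: int, bytes_per_element: int = BYTES_PER_ELEMENT) -> int:
    """Memory needed to hold one rows x cols tile."""
    return rows * cols * bytes_per_element


# Two input tiles kept in L1 cache, at the small and large end of the quoted range
l1_small = 2 * tile_bytes(64, 32)
l1_large = 2 * tile_bytes(96, 64)
# One tile of output sums kept in registers
reg_small = tile_bytes(16, 16)
reg_large = tile_bytes(32, 32)

print(f"L1 input tiles: {l1_small // 1024}-{l1_large // 1024} KiB")
print(f"register output tile: {reg_small // 1024}-{reg_large // 1024} KiB")
# prints:
# L1 input tiles: 16-48 KiB
# register output tile: 1-4 KiB
```

So the two input tiles need 16 to 48 KiB and the output tile 1 to 4 KiB, small enough to sit right next to the execution units.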

For example, a 100MB matrix can easily be split into smaller matrices that fit into the cache and registers. You can then perform matrix multiplication using three matrix tiles and achieve memory throughput ranging from 10 to 80TB/s, which is incredibly fast. This is the last nail in the CPU’s coffin for deep learning, as GPUs simply outperform CPUs at this kind of workload.
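
The splitting idea is easiest to see in a blocked (tiled) matrix multiplication. For scale, a 100MB matrix of 32-bit floats is about 5000×5000 elements. The pure-Python sketch below is not how a GPU kernel is written, and the `tile` size is an arbitrary illustrative parameter; it only shows the access pattern: for each output tile, the loop walks over matching tiles of `a` and `b` (the two tiles a GPU would hold in L1 cache) and accumulates into the output tile (the one it would keep in registers).

```python
def tiled_matmul(a, b, tile=2):
    """Multiply a (n x k) by b (k x m), both lists of lists, one tile at a time."""
    n, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    m = len(b[0])
    c = [[0.0] * m for _ in range(n)]
    # Walk over the output one tile at a time (on a GPU: the register tile).
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            # Step along the shared dimension, pairing a tile of `a` with a tile of `b`
            # (on a GPU: the two input tiles held in L1 cache / shared memory).
            for p0 in range(0, k, tile):
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = c[i][j]
                        for p in range(p0, min(p0 + tile, k)):
                            acc += a[i][p] * b[p][j]
                        c[i][j] = acc
    return c


a = [[1, 2, 3], [4, 5, 6]]
b = [[7, 8], [9, 10], [11, 12]]
print(tiled_matmul(a, b))  # [[58.0, 64.0], [139.0, 154.0]]
```

Because every tile of `a` and `b` is reused for a whole tile of outputs, on a GPU each value is loaded from main memory far fewer times than in a naive triple loop; that reuse is what lets the fast on-chip memories do most of the work.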

Any capable IT consulting company will tell you that GPUs are best suited for deep learning thanks to their high-bandwidth memory, the thread parallelism that hides memory latency, and their large, easily programmable L1 memory and registers. Although specialized processors have been around for quite some time, making one that was easily accessible for applications other than graphics was rather difficult, which meant developers had to write code for each processor individually. However, with growing demand for open standards for accessing hardware such as GPUs, it’s safe to say that we will use graphics cards for alternative tasks more than ever before.

If you liked this article, follow us on Twitter @themerklenews and make sure to subscribe to our newsletter to receive the latest bitcoin, cryptocurrency, and technology news.